I still remember February 2024, when OpenAI first showed off its Sora demo video. Like tech enthusiasts around the world, I was completely blown away by the stylish woman walking through the streets of Tokyo. At that moment, we all thought the "GPT-3.5 moment" for video generation had arrived.

However, the wait lasted over a year. It was not until September 30, 2025, that OpenAI finally released Sora 2 to the public.

Over months of in-depth use, I generated hundreds of videos, from surreal sci-fi scenes to photorealistic micro-movie clips. Does Sora 2 still lead the pack? Surrounded by Kling 2.6, Google Veo 3.1, and Alibaba's newly released Wan 2.6, can it hold on to its throne?

Today, I am bringing you an honest, in-depth, first-person review of Sora 2.

First Impressions: The Core Evolution of Sora 2

When I first opened the Sora app, my immediate impression was that this is no longer just a "model" but a social product trying to become the "AI version of TikTok." As a creator, though, I care more about its core capabilities.

1. Physics Engine-Level Realism

What surprised me most about Sora 2 is its understanding of the physical world. I tried generating "a glass shattering in slow motion with red wine splashing." In earlier models, liquids often flew in defiance of gravity, and glass shards scattered along illogical trajectories. In Sora 2, the fluid dynamics were jaw-dropping, and the light refracting through every droplet of wine held up to close scrutiny. Clipping still occasionally appears in very complex interactions (like hands grasping objects), but lighting and material rendering are close to perfect.

2. Native Audio: Finally, No More Silent Films

Native audio is one of the biggest upgrades in Sora 2. Previously, after generating a video, we had to add sound with separate dubbing tools. Now Sora 2 understands the visual content and automatically generates synchronized sound effects. I generated a rainforest downpour; not only did the rain fall on screen, but I could clearly hear the different textures of rain hitting leaves versus hitting mud. Getting picture and sound from a single generation cuts out an entire post-production step.

3. Cameo Feature: The Savior of Character Consistency

For anyone wanting to make AI short dramas, character consistency has always been a nightmare. Sora 2 introduced the Cameo feature: you record a short video of yourself once in the app, and that likeness can then be reused across different scenes. In my testing, as long as the angles are not extreme, Sora 2 keeps facial features quite stable. This moves "AI cinema" from a concept to something you can actually produce.

Prompt tip: Sora 2 is not easy to master. If your generated videos keep missing the mark, I suggest using a dedicated prompt-assistant tool. I personally recommend this GPT: Sora 2 AI Video Generator GPT, which expands simple ideas into detailed, professional prompts that Sora can follow.

The Ultimate Showdown: Sora 2 vs Competitors (Kling 2.6, Veo 3.1, Wan 2.6)

The AI video generation field is currently a clash of titans. To show their differences directly, I ran a series of side-by-side comparison tests.

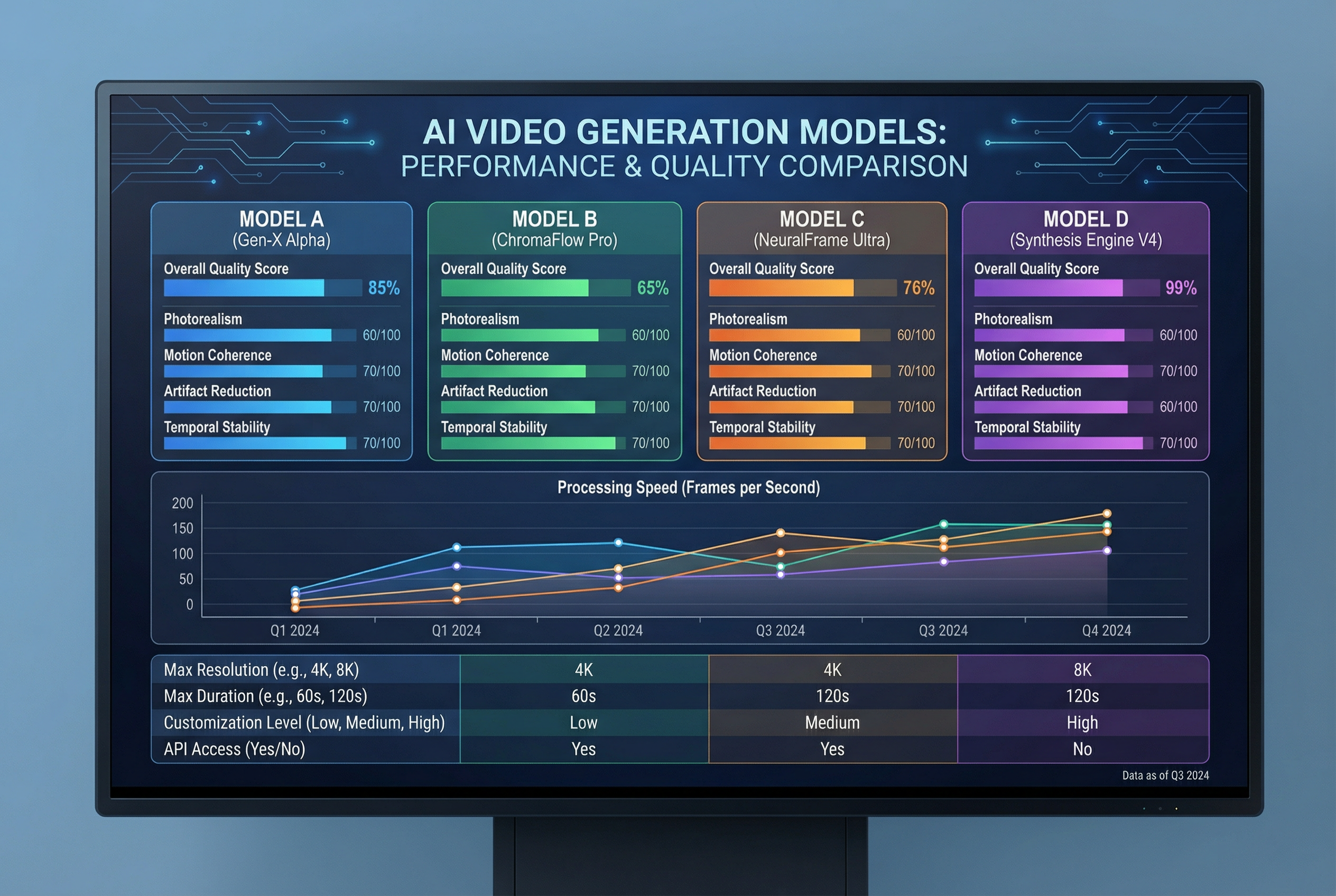

1. Core Specs and Features Comparison

Here is a comparison table of the four mainstream models based on my actual testing:

| Feature/Model | OpenAI Sora 2 | Kling 2.6 | Google Veo 3.1 | Wan 2.6 |
|---|---|---|---|---|
| Strengths | Physics simulation, surrealism, long takes | Character motion range, complex interactions | Cinematic lens feel, narrative flow | Mobile optimization, generation speed |
| Duration | Max 20s+ (extendable) | 5s / 10s (High Performance Mode) | 1 min+ (capable of long narrative) | 5-10s |
| Audio generation | Native support (high sync rate) | Supported (decent effect) | Supported (focuses on ambient sound) | Currently weak |
| Consistency | Excellent (Cameo feature) | Good (needs tuning) | Excellent (enterprise-level control) | Good |
| Access barrier | High (requires Plus/Pro and region locked) | Medium (web/app available) | High (mainly enterprise/YouTube) | Low (app readily available) |

2. Hands-on Feel and Analysis

Kling 2.6: The King of Motion

If your video involves a lot of large-scale character movement (like dancing or martial arts), Kling 2.6 is still the king. In my tests, Sora 2 sometimes produced distorted limbs during intense movement, whereas Kling 2.6 handled the same scenes smoothly. Kling's lip-sync also performed exceptionally well with dialogue.

Google Veo 3.1: The Film Director's Choice

Veo 3.1 feels more like a professional cinematographer. It has a strong grasp of camera language (pan, tilt, zoom, dolly). If you need a highly cinematic establishing shot or narrative segment, Veo 3.1's lighting often has more character than Sora 2's.

Wan 2.6: The Mobile Dark Horse

Alibaba's recently released Wan 2.6 surprised me, especially on mobile. It may lag slightly behind Sora 2 in fine physical detail, but it generates quickly and captures Eastern aesthetics very well, making it a great fit for Asian-style content.

Thinking About Alternatives

While Sora 2 is powerful, the expensive subscription and unstable access have deterred many. If you are looking for a functional alternative that is easier to access, I recommend trying Sora 2 AI Video Generator. It integrates advanced video generation capabilities and is a cost-effective Sora 2 alternative for creators who cannot access OpenAI services directly or are on a budget.

Price War: Is Your Wallet Ready?

AI video generation is definitely a money-burning game, and each vendor's pricing strategy reflects the users it is targeting.

| Model | Subscription Model | Est. Cost/Video | Notes |
|---|---|---|---|
| Sora 2 | ChatGPT Plus/Pro subscription | High | Daily free limit; extra cost for overage. Must buy membership plus quotas. |
| Kling AI | Credit system (daily login bonus) | Medium | Relatively friendly to free users; Pro membership has decent value. |
| Veo 3.1 | Mainly B2B API or YouTube integration | High | Aimed at professional agencies; hard for individuals to access cheaply. |
| Seadance AI | Flexible subscription | Low to medium | Offers more flexible plans, suitable for medium to light users. |

My advice: If you are a heavy user, Sora 2's Pro subscription (around the $200/month tier) is worth it for the high-definition output. If you are just testing the waters occasionally, Kling's daily free credits are sufficient.

Real-World Use Cases for Sora 2: What Can It Do?

After two months of tinkering, these are the most practical use cases I have found for Sora 2 right now:

- Ad pre-visualization: Ad agencies used to draw storyboards before shooting. Now they can use Sora 2 to generate dynamic animatics directly. Clients grasp the director's intended lighting and camera movement at a glance, reducing communication costs by as much as 80 percent.

- Social media short videos: The Sora 2 app is itself a community. With its Remix feature, you can quickly rework other people's videos. For example, a cyberpunk-style cat paired with dynamic AI music can easily gain traction on TikTok or Reels.

- E-commerce product showcases: Generating specific products (like a particular phone model) is not yet precise enough, but generating atmospheric backgrounds is superb. A misty morning forest behind a perfume bottle, for instance, instantly makes the product feel more premium.

- Education and science: Imagine using video to directly demonstrate cell division or a black hole devouring a star. Sora 2's physics simulation shines here.

Summary: Pros and Cons of Sora 2

Beyond the hype, Sora 2 is not perfect.

Pros

- Physics simulation ceiling: Its understanding of lighting, fluids, and collisions remains the industry benchmark.

- Ecosystem integration: Write scripts with ChatGPT, create reference images with ChatGPT's image generation, and finally generate video with Sora. OpenAI's closed loop is a real advantage.

- Native audio: Saves the hassle of post-production dubbing.

- Disney backing: With Disney's investment and its license to use Disney IP (such as Star Wars and Marvel characters), Sora 2 has real potential for fan creations.

Cons

- The gacha experience: Generation is a lottery. Sometimes I need ten attempts to get one perfect shot, which wastes compute and money.

- User retention doubts: Reported data suggests the Sora app's retention is not high. Watching is easy, but creating through prompts still has a learning curve, so ordinary users tend to quit once the novelty wears off.

- Strict content censorship: For safety reasons, Sora 2 has many restrictions on copyright and sensitive content, which limits creative freedom to some extent.
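To put a number on the gacha problem, here is a rough back-of-the-envelope model. It assumes, as a simplification, that each generation independently yields a usable shot with the same probability $p$ and costs the same amount $c$; real attempts are not truly independent, since you tweak the prompt between tries. Under that assumption the number of attempts $N$ follows a geometric distribution, so:

$$
\mathbb{E}[N] = \frac{1}{p}, \qquad C_{\text{usable}} = c \cdot \mathbb{E}[N] = \frac{c}{p}
$$

With $p = 0.1$ (the one-in-ten hit rate from the gacha point above), $\mathbb{E}[N] = 10$, so every usable clip effectively costs ten times the sticker price of a single generation. This is why the "Est. Cost/Video" column in the pricing table understates what a picky creator will actually spend.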

Final Verdict: Which One Should You Choose?

As 2025 comes to a close, AI video generation is no longer a one-horse race; a hundred flowers are blooming.

- If you want the best visual logic and physical realism, or you are a loyal OpenAI ecosystem user, Sora 2 is still your first choice. Do not forget to use a Sora 2 prompt-assistant GPT to improve your success rate.

- If you care most about character motion, or mainly create dance or action short videos, China's Kling 2.6 may give you a better experience than Sora.

- If you are a mobile user who wants to create anytime, anywhere, try Wan 2.6 or Seadance AI. Both have a lower barrier to entry and return results faster.

The future of AI video is here. Tools are just brushes; the real core remains the story you want to tell in your mind. Now, go generate your first masterpiece.